📅 Posted 2018-08-03

So I was thinking the other day, how long have I been working on Koi CMS?

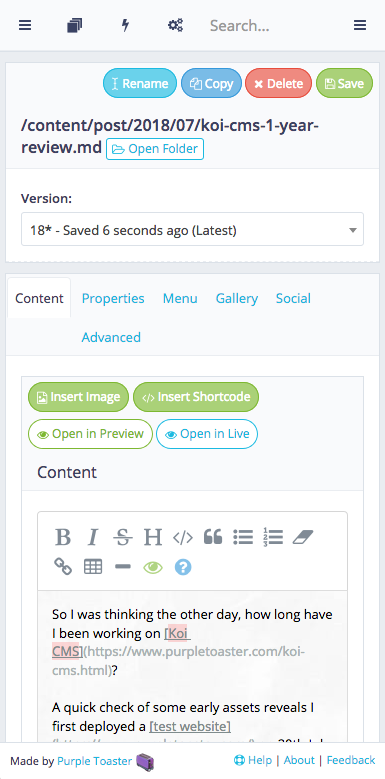

A quick check of some early assets reveals I first deployed a test website on 20th July 2017 but the real “Ed” infrastructure went up 26th July 2017, followed by other data storage (aka S3 buckets) on the 27th.

Let’s call 26th July 2017 the birth of Koi.

In actual truth, I had a go at building Koi way back in 2015 but didn’t get very far. Strangely, I first registered the domain for Koi almost exactly 3 years ago, but I grew bored of the idea pretty quickly.

Boy, the last 12 months have been fruitful in comparison!

What have I learnt so far?

Let’s break it down into a few different areas. I’ll describe a lot of my experiences with AWS components since they make up 99% of the infrastructure required.

My role(s)

It’s quite liberating being the product guy, support, devops, front end dev, back end dev, architect, tester, … well there is a lot of work involved in building a CMS and doing “all the things” does create some problems. One example is I’d love to make my code perfect but I just can’t given the timeframe, so there are probably some bits of code that a decent JS developer would just cry over. But you know, priorities and all that.

What do they say? Working software? Well, it mostly works, anyway!

What’s in a name?

I started with Koi Pond mainly because koipond.io was available. First capital purchase! But after some early feedback about Koi Pond feeling a bit awkward on the tongue (combined with everyone basically just calling it “Koi”), I figured I’d just stick to what users call it. Thanks to the beta testers for this one!

As for the name, Koi are pretty and I’ve got a few photos of Koi Ponds which I took whilst on holidays, so good memories. Plus I didn’t realise at first, but since a lot of my clients are dentists, they typically have fish tanks so that matches as well… but normally filled with tropical fish and not Koi. Ah well, can’t have it all.

How about some more technical learnings?

DynamoDB

For infrastructure where every cent counts, having mandatory CloudWatch alarms for every table and every index you create can make things quite costly compared to other solutions. I was surprised that the alarms cost more than the storage + hourly access fees.

I also discovered that reservations cost you money every hour even if you don’t allocate all reserved capacity, so make sure to either use the scaling (which I’m told isn’t reactive enough) or allocate every scrap of reserved capacity to something. You might as well.

Having to create indexes for each use-case felt a little more painful than SQL. At least with SQL, you could consider indexes later, if you wanted to tweak performance once you were going down a particular direction. With DynamoDB, you have to be pretty sure of what you want in an index before creating one. Global Secondary Indexes were also slightly complicated to script with the AWS CLI, since the syntax has to be 100% spot-on, but not impossible. It’s something which is “once right, never touched”.

Finally, I regret forgetting to include the Git branch name in a lot of deployed components, so the inability to easily rename tables is annoying but not completely unsolvable, with a bit of data transfer from one table to another. At least the tables are namespaced, just not in the most perfect way that I would like to use right now. I can imagine that if you had a larger app with many more tables, things could become a pain to keep track of.
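To make the namespacing idea concrete, here is a minimal sketch of how a table name could carry the Git branch. The `app.branch.table` layout and the `table_name` helper are my own assumptions for illustration, not how Koi actually names its tables.

```python
import re


def table_name(app: str, branch: str, table: str) -> str:
    """Build a DynamoDB table name namespaced by app and Git branch.

    Hypothetical helper: the "app.branch.table" layout is an assumption.
    """
    # DynamoDB table names may only contain a-z, A-Z, 0-9, '_', '-' and '.',
    # so characters such as '/' in "feature/foo" are swapped for '-'.
    safe_branch = re.sub(r"[^A-Za-z0-9_.-]", "-", branch)
    name = f"{app}.{safe_branch}.{table}"
    # Table names must be between 3 and 255 characters long.
    if not 3 <= len(name) <= 255:
        raise ValueError(f"table name has invalid length: {name!r}")
    return name
```

Deriving every name from one function like this means a rename is at least a one-line change in the deploy scripts, even if the data still has to be copied across.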

S3 + CloudFront

Nothing to complain about here, in fact the combo works rather nicely… but the migration from easy “S3 Web Hosting” to strangely-more-difficult plain S3 fronted by CloudFront meant that I could take advantage of Origin Access Identities, which just felt like a good thing to do. This didn’t come without its challenges. It was only possible with additional work to make sure 301s, 404s and the like are still possible. Enter Lambda@Edge… which is its own beast, of course. The publishing of new versions and making sure all CloudFront distributions use the correct published version is scriptable, but a complication I could definitely do without.

Versioning in S3 appears really powerful on the surface because it can help manage changes, rollbacks and deletes. Fat fingers rejoice! But I’m not entirely sure that it’s a great idea if you wanted to sync or clone a complete bucket - history and all - to another bucket. You can do it, but it doesn’t feel as native as it should be.

Oh, and I’d like to rename some buckets because I’ve changed my mind on how I should name them, but I’ll live with the original names.

Lambda@Edge

This ended up being 100% instrumental to making it all work together. Without a Lambda function tied to Origin Responses, I wouldn’t be able to set up 301 redirects, mask S3 metadata from leaking through headers and add headers for HSTS et al. I did go a little too wild on the Strict Transport Security controls as well - turns out some of my clients have additional services on subdomains which aren’t secured under HTTPS (tsk, tsk!) but that’s out of my control, so throwing includeSubDomains around too liberally was an early mistake which had to be relaxed at one point.
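For the curious, an origin-response function along these lines can be quite small. This is a rough sketch in Python rather than Koi’s actual code: it assumes redirects are stored on the S3 object and surface as the `x-amz-website-redirect-location` header, and the `HSTS_VALUE` constant and the choice to strip every `x-amz-` header are my own assumptions.

```python
# Assumed policy: one year, deliberately without includeSubDomains.
HSTS_VALUE = "max-age=31536000"


def handler(event, context):
    """Lambda@Edge origin-response sketch: redirect, mask headers, add HSTS."""
    response = event["Records"][0]["cf"]["response"]
    headers = response["headers"]  # keyed by lowercase header name

    # Assumption: the object carries a redirect target in its S3 metadata.
    redirect = headers.get("x-amz-website-redirect-location")
    if redirect:
        response["status"] = "301"
        response["statusDescription"] = "Moved Permanently"
        headers["location"] = [
            {"key": "Location", "value": redirect[0]["value"]}
        ]

    # Stop S3 metadata from leaking through to the browser.
    for name in [n for n in headers if n.startswith("x-amz-")]:
        del headers[name]

    headers["strict-transport-security"] = [
        {"key": "Strict-Transport-Security", "value": HSTS_VALUE}
    ]
    return response
```

Leaving `includeSubDomains` out of the HSTS value keeps the policy scoped to the host being served, which avoids breaking client subdomains that are still on plain HTTP.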

Lambda

Probably the only thing worthy of mention here is the risk of running out of disk space (see my blog entry on that matter). Oh, and the fact that there are a billion ways to deploy Lambda functions, so I seem to spend a fair chunk of time trying one method and then moving on, rather than building new features. I guess it’s a form of procrastination!

API Gateway

My only gripe here is that when deploying a fresh Swagger spec, everything comes across nicely EXCEPT for the permission for API Gateway to invoke Lambda functions. I can see why this is the case, but the extra step means cloning APIs isn’t as easy as it should be. Also, the console has a minor bug where deleted header values are left behind in the config as orphans. They’re easily removed from the Swagger, but it’s amusing that they’re still hidden away behind the scenes somewhere.
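The extra permission step can be scripted. Here’s a minimal sketch that only builds the arguments for the Lambda `add_permission` call; the `invoke_permission` helper, the default statement ID and the wildcard stage/method/path are assumptions for illustration, so tighten them to suit.

```python
def invoke_permission(function_name: str, region: str, account_id: str,
                      api_id: str, method: str = "*", path: str = "*",
                      statement_id: str = "apigateway-invoke") -> dict:
    """Build arguments granting API Gateway permission to invoke a function.

    Hypothetical helper; the result is meant to be passed to boto3, e.g.
    boto3.client("lambda").add_permission(**invoke_permission(...)).
    """
    # Source ARN layout: api-id/stage/METHOD/resource-path ('*' = any stage).
    source_arn = (
        f"arn:aws:execute-api:{region}:{account_id}:{api_id}/*/{method}/{path}"
    )
    return {
        "FunctionName": function_name,
        "StatementId": statement_id,
        "Action": "lambda:InvokeFunction",
        "Principal": "apigateway.amazonaws.com",
        "SourceArn": source_arn,
    }
```

Running something like this once per function after importing the Swagger spec closes the gap when cloning an API.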

General billing with AWS

It’s tricky to make sense of AWS billing at times, but overall it’s reasonable value at the end of the day. I think a lot of companies struggle with reporting the cost of running specific pieces of infrastructure. The serverless model means some things (like Route 53 or CloudWatch alarms) make up most of the cost of running the system, which is amusing at best. Find out a bit more about my costs here.

My only cash splash in 12 months was deploying a second “test” environment, which helped a lot with pulling together a new release of features, whilst keeping the live system unaffected. Recommended!

Go, Hugo!

Frequent releases are great!

New features are great!

But… I really don’t have time to take advantage of all the new power that Hugo brings to be honest. But at least most of the upgrades have been seamless… with only one breaking change due to a bug with passing the theme on the command line. But there’s an easy workaround, so once that was in place, all was good.

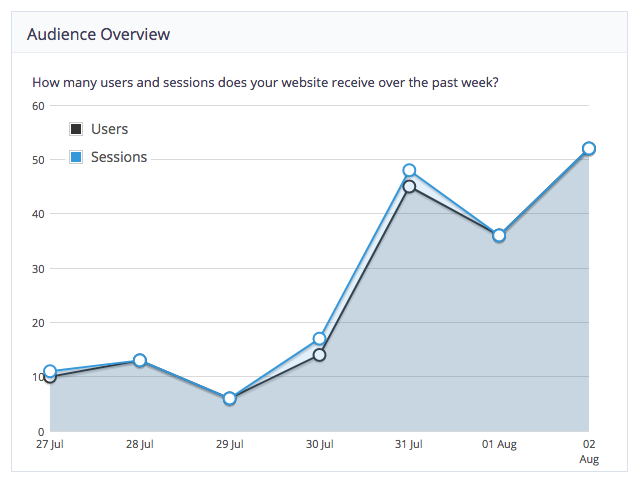

Google Analytics

It’s super-enabling to be able to edit content, then check out some stats, then flip to another site, and look at some other graphs, all without having to log into multiple systems. The graphs and stats are light (as far as decision making goes) but the convenience can’t be beat. The Google Analytics Reporting API was surprisingly easy to use and provides all the figures I could dream of for all sites on Koi. Even the editor can be graphed inside the editor, which is meta but such a convenience.

CircleCI

Definitely a powerful tool and great value on the “free” plan, CircleCI has allowed me to optimise the code-test-build-deploy pipeline. Storing the config as a YAML file in the repo in a branch is such a great way to version and deploy the build workflow that I’m surprised not every build system uses it. Or maybe they are just getting there, one day.

Small annoyances in the current state of the web

Cross-platform shortcut keys in browsers

Oh man, this one requires evaluation of multiple libraries, held back by multi-platform testing, PLUS they all seem to behave differently depending on the ‘focus’ of the cursor (e.g. within a regular text box, within SimpleMDE, etc.).

Viewports and responsive design

Trying to fit the Koi CMS Editor into a mobile phone viewport is really hard. Most of the features work, but there is a lot of detail lost when translated down to a small screen. I think I’ll need to spend a lot more time just removing things which don’t fit. In real life, I don’t think any of my users use a mobile device for Koi (judging by the Analytics), but still I’d like it to work for myself at least!

What’s in the future?

Actually, there are quite a lot of outstanding tasks which I haven’t got around to yet, but this is either due to:

- Low overall value (not that interesting)

- Questionable complexity (lazy)

- Sounds like a good idea but nobody seems to want it yet (parked)

Some of the things I’d like to add over time (now that the foundation is there) include:

- Role-based access control

- Better “Shortcodes” function to make it zero-code but still amazing to use

- Better and more severe linting

- More functional, API, contract, you-name-it testing in the pipeline

- Measurement for A/B test experiments

- Better image and gallery support, because everyone wants to upload a picture and it’s not the easiest at the moment

One last thing: what I would do differently

I’d probably re-design the structure of the Koi editor from the ground up, in a way that makes more sense to editors. This would completely ignore the structure that Hugo requires and instead start from what editors actually need. The “Publish” process would then convert whatever this structure is to the Hugo format and then call Hugo, which gives it a nice path to publishing as well as an easier structure for editors to deal with.
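As a rough illustration of that “convert, then call Hugo” step, the sketch below turns an editor-friendly page into a Hugo content file using JSON front matter. The `to_hugo_page` name and the page fields (`title`, `published`, `live`, `body`) are hypothetical, as that structure doesn’t exist yet.

```python
import json


def to_hugo_page(page: dict) -> str:
    """Convert an editor-side page (hypothetical shape) to Hugo content."""
    front_matter = {
        "title": page["title"],
        "date": page["published"],
        # Editors think in terms of "live"; Hugo thinks in terms of "draft".
        "draft": not page.get("live", False),
    }
    # Hugo accepts front matter as a JSON object at the top of the file,
    # followed by the page content.
    return json.dumps(front_matter, indent=2) + "\n\n" + page["body"].strip() + "\n"
```

The publish step would write the returned text into Hugo’s content directory and then run Hugo as usual, so editors never have to see the Hugo layout.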
