📅 Posted 2018-09-25

I haven’t had a tech post for a while so I figured it was about time I discussed one of many AWS services available today: Amazon Rekognition. It’s certainly an awkward word to type, but what can we do with it?

I added a ticket to my backlog, simply: “Do something interesting with AWS Rekognition”. This post is the result of doing something interesting!

I’m a bit lazy when it comes to adding alt text to images on my site and I know this has direct implications for SEO and accessibility. Perhaps I could fix this with the Amazon Rekognition Image API?

Some focus

I’m going to be looking at one particular feature of Amazon Rekognition, which is “Object and scene detection”. Amazon describe this as:

Rekognition automatically labels objects, concepts and scenes in your images, and provides a confidence score.

Setting it up

Amazon Rekognition brings a model that can interpret an image and return a list of concepts with confidence scores. This payload is in JSON and looks a bit like this:

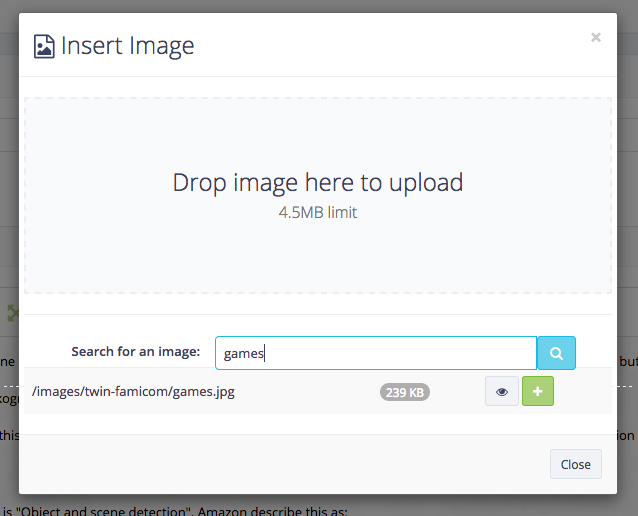

Given this image of a collection of random Famicom games in a box:

We get this response:

{

"Labels": [{

"Name": "Art",

"Confidence": 87.74759674072266

}, {

"Name": "Box",

"Confidence": 62.48863220214844

}, {

"Name": "Brochure",

"Confidence": 55.295753479003906

}, {

"Name": "Flyer",

"Confidence": 55.295753479003906

}, {

"Name": "Paper",

"Confidence": 55.295753479003906

}, {

"Name": "Poster",

"Confidence": 55.295753479003906

}, {

"Name": "Shop",

"Confidence": 53.21715545654297

}, {

"Name": "Ornament",

"Confidence": 50.9842643737793

}, {

"Name": "Tapestry",

"Confidence": 50.9842643737793

}]

}

If you inspect the image above in this blog, you can see an example of auto-generated alt text.

It’s pretty interesting to see a few different things here:

- It’s picked up there is a box in the image and there is also a lot of artwork.

- You could say that the labels on the game are flyers, brochures, paper and posters.

- Shop. Not sure about this one! But maybe I should be putting the games up for sale? Never!

- Tapestry. I actually love this one! It’s picked up my carpet, which is definitely woven.

So overall, an interesting result for this image. I could then convert this to an alt tag in the most simplest way possible, which would produce:

<img src="famicom.jpg" alt="Image of Art, Box, Brochure, Flyer, Paper, Poster, Shop, Ornament, Tapestry">

I’m not sure if this helps…

So what about text in images, then?

For this specific use-case, this might be a better avenue. So let’s try Amazon Rekognition’s “Text in image” feature for the same Famicom games photo. I’m going to cut this text down to the Reader’s Digest version, for clarity (as I don’t want the bounding boxes):

| Detected Text | Confidence |

|---|---|

| SUPER | 99.3436279296875 |

| USA | 99.0685043334961 |

| SUPER MARIO | 97.0722885131836 |

| JUNTOFT | 96.7414474487304 |

| JUNTOFT | 96.7414474487304 |

| Nintendo | 96.6177215576171 |

| HVC -MA USA | 96.0659790039062 |

| MADE | 95.5694580078125 |

| 1985 Nintendo | 95.4043273925781 |

| TETRIS2 | 95.3259506225586 |

| MARIO | 94.8009567260742 |

| -MA | 94.5947647094726 |

| HVC | 94.53466796875 |

| 30 | 94.3270721435546 |

| 30 | 94.3270721435546 |

| 1985 | 94.190933227539 |

| HVC- | 93.2226104736328 |

| MADE | 91.9750213623046 |

| Nintendo MADE | 91.7140808105468 |

| Nintendo | 91.4531478881836 |

| +BomBliss. | 90.6037216186523 |

| -SM | 90.5084991455078 |

| MADE IN | 90.5084457397461 |

| THERY | 90.4601211547851 |

I’ve set the threshold at greater than 90% confidence, although this is purely arbitrary based on what I was getting for this image. These results are a lot more useful for alt tags for this image than the generic object detection, but only because the image contained a heavy amount of text.

Deduping the words, now the alt tag could look like if we used the text detection model:

<img src="famicom.jpg" alt="SUPER, USA, SUPER MARIO, JUNTOFT, Nintendo, HVC -MA USA, MADE, 1985 Nintendo, TETRIS2, MARIO, -MA, HVC, 30, 1985, HVC-, Nintendo MADE, +BomBliss., -SM, MADE IN, THERY">

I think this is much better for this image example, but it may not be great for all images.

Writing a Lambda function

Having decided on evaluating the rather generic object detection model, here’s some basic code to get this running in a NodeJS based Lambda function. This is pretty much pass-thru, so it cuts down on validation and so forth for simplicity sake:

const AWS = require('aws-sdk');

exports.handler = (event, context, callback) => {

const rekognition = new AWS.Rekognition();

const params = {

Image: {

S3Object: {

Bucket: process.env.S3_SOURCE_BUCKET,

Name: event.key,

},

},

MaxLabels: 10,

MinConfidence: 50,

};

rekognition.detectLabels(params, (rekerr, rekdata) => {

if (rekerr) {

console.log(rekerr, rekerr.stack);

callback('Could not analyse file object');

} else {

console.log(`Response from Rekognition: ${rekdata}`);

callback(null, rekdata.Labels || []);

}

});

};

Adding it to API Gateway

Probably not the most exciting part of the job - in fact, if you aren’t using the “proxy” mode of API Gateway then you’re in for a real whole lot of configuration. Anyways, suffice to say that the Lambda function needs to be accessible from web clients, so after creating some mapping and error handling, the function is finally callable.

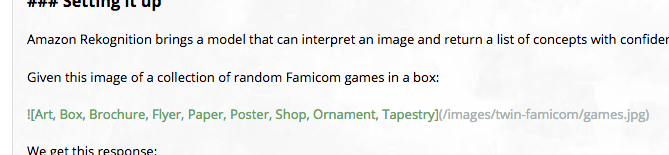

Adding the function to the page editor inside Koi CMS

This is not the most efficient way but it’s the easiest for the moment: call Amazon Rekognition every time an image is inserted into a page. It would be better to add the image analysis results as metadata on the image itself, but that can be a feature added at a later date. MVP and all, y’know. It works at this volume and if it becomes a popular feature, it can be optimised at a later date.

Giving it a try!

Firstly, let’s click the “Insert Image” button…

And after uploading the image, we get the following Markdown:

Fun fact, only the 1st image from the two images above was correctly identified as a “webpage”.

Some more examples

So we’ve seen Amazon Rekognition process a photo of Famicom games using two different techniques, but with a focus on detecting labels, let’s try a couple more real-world examples.

Image 1: Old Photo from Hawkesbury People and Places

This is F J Mortley’s Boot Palace (what a name!) and the results are:

Architecture, Building, Temple, Worship, House, Housing, Villa, Brick, Column, Pillar

I chalk this one up as a success, although the terms are super generic.

Image 2: Random Dentistry-Related Photo

Computer, Electronics, Laptop, Pc, Adapter, Connector, Electrical Device

So I do some work for dental websites and here’s a photo of a bite wing x-ray machine. There must be some strange init caps going on (“Pc”?) but otherwise this produces another set of generic concepts.

Image 3: Graphics

Let’s go for something a bit more left-field: let’s try an image which has been drawn as graphics, rather than taken as a photo.

This image has some filters and general fanciness applied to it.

Brochure, Flyer, Paper, Poster, Calendar, Text, Page

I start to get the feeling that “Brochure”, “Flyer” and “Poster” are returned quite a lot… and actually, seem quite semantically similar when you think about it.

Pricing

The pricing is as follows (as of writing, subject to change) for the Image API only (I didn’t look at video) and only for the Sydney region (as an example since that’s where I want my services):

| Image Analysis Tiers | Price per 1,000 Images Processed (USD) |

|---|---|

| First 1 million images processed per month | $1.30 |

| Next 9 million images processed per month | $1.00 |

| Next 90 million images processed per month | $0.80 |

| Over 100 million images processed per month | $0.50 |

Considering I have very few images, I’m probably only ever going to be up for about $1.30 USD a month. Plus currency conversion, plus GST. So probably closer to $2 AUD.

More info here: https://aws.amazon.com/rekognition/pricing/

Conclusion

Overall, Amazon Rekognition is a handy tool which can be used to interpret images and produce a list of keywords with confidence scores. The pricing is quite good considering the small amount of effort required to use the service, however the results are very ‘generic’ and don’t really add a lot to the images in this situation. The text (aka OCR) interpretation model seems a lot better (at least for images with text in them!) and would be a lot more useful in some scenarios.

It would be good to spend more time to have a look at a broader range of images and judge the effectiveness. But for the moment, I think that using Rekognition to produce “better” alt tags is probably not the perfect option: it produces some sort of alt tag, which is probably better than no alt tag, but is certainly nothing on hand-crafted alt tags.

Outside of some SEO benefits, having the alt text on all images automatically does seem like a nice thing to do when it comes to accessibility. I haven’t tried a screen reader but I know it’s quite tricky to be compliant in this respect. This makes me wonder if it’s worth doing in websites piecemeal (as described here) or if it would be better if accessibility tools had this feature built-in. That could get expensive, though, if images with missing alt text are analysed as you browse, even if you stored the results with a hash.

More information on Amazon Rekognition is available here and for those using Wordpress, there is a plugin.

Like this post? Subscribe to my RSS Feed or

Comments are closed